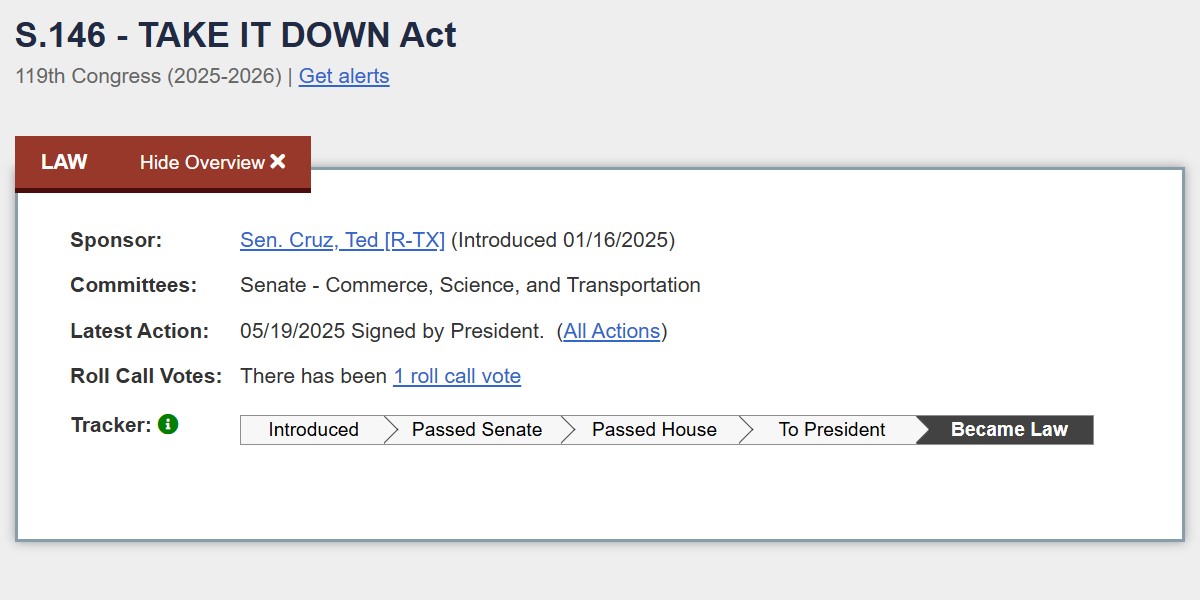

On May 19, 2025, President Donald Trump signed the Take It Down Act during a formal Rose Garden ceremony. The law establishes federal protection for individuals targeted by nonconsensual deepfakes and intimate image abuse.

The event included congressional leaders, survivor advocates, and federal officials, all gathered to mark a sweeping shift in national digital safety policy.

Melania Trump stood beside the President throughout the event. Her advocacy, including private meetings with families affected by online image manipulation, helped raise public awareness of the issue. She described the law as a “lifeline” for victims who previously had no recourse.

The legislation passed with rare bipartisan support:

- House Vote: 409 to 2

- Senate Vote: Passed by unanimous consent

- Key Sponsors: Senator Ted Cruz (R-TX) and Senator Amy Klobuchar (D-MN)

Their joint remarks emphasized the urgency of acting before deepfake content inflicts further personal and psychological damage.

What the Take It Down Act Forces Platforms to Do

The law sets a firm requirement for digital platforms:

- Content flagged by victims must be removed within 48 hours of a valid removal request

- Applies to nonconsensual intimate images and videos of real people, whether authentic or AI-generated

- Noncompliance exposes platforms to federal enforcement

Federal Trade Commission Takes the Lead

Under the act, the FTC gains authority to:

- Investigate and fine companies that ignore removal deadlines

- Monitor repeat violations across platforms

- Coordinate with state attorneys general to support prosecution

The measure also directs agencies to educate the public on rights and reporting procedures, aiming to close gaps in awareness that left many victims unprotected in the past.

Why Congress Moved Fast to Pass the Bill

Lawmakers cited a surge in exploitative AI content as the final trigger for urgent legislative action. Several recent deepfake incidents involving minors spread online, drawing widespread outrage and calls for reform.

The most widely known case involved a teenage girl whose synthetic images spread widely online without her consent. Her family testified before Congress earlier in the year.

Growing Threat Posed by AI Image Generators

Advances in generative AI led to:

- Realistic fake images distributed across social media

- Intimate depictions involving public figures, students, and professionals

- Victims facing limited or inconsistent legal options that varied from state to state

Senators warned that AI had created an environment where digital impersonation could ruin lives without legal consequence. The bill was crafted to interrupt that pattern.

Reactions Across Advocacy and Industry Groups

Groups that have long pushed for stronger digital protections applauded the new law, welcoming the 48-hour rule as a meaningful step toward restoring agency to victims.

Industry Leaders Respond with Pledges

Several tech companies issued statements supporting the law. Social media platforms pledged to:

- Build faster reporting tools

- Improve transparency in removal decisions

- Expand moderation teams to meet deadlines

The law also raised expectations for prompt internal changes within content-sharing platforms.

Critics Raise Concerns About Enforcement Language

Although the act gained broad support, some digital rights organizations flagged potential flaws. Concerns focused on:

- Ambiguity in defining nonconsensual content

- Risk of overreach in enforcement

- Possible impact on encrypted services

Groups such as the Electronic Frontier Foundation warned that hasty takedowns could sweep up lawful expression, especially in cases involving parody, satire, or anonymous reporting.

Privacy Advocates Urge Caution

Several policy experts argued that enforcement could weaken encryption or chill journalistic speech. They called for:

- Stronger judicial review before takedown orders

- Protections for whistleblower content

- Clarification on what qualifies as synthetic media

Lawmakers signaled openness to future amendments, but emphasized that protecting victims remained the primary goal.

What Happens Next for Platforms and Victims

Victims now have a federally backed process to seek help. Steps include:

- Filing a removal request with the platform

- Escalating complaints to the FTC if ignored

- Relying on FTC enforcement, which can bring civil penalties against noncompliant companies

The law encourages cross-agency cooperation, allowing state prosecutors to pursue cases alongside federal investigators.

Platforms Face Real Deadlines

Online platforms must:

- Log reports and confirm receipt within a strict time frame

- Remove confirmed deepfake content within 48 hours

- Preserve evidence for legal follow-up

The FTC will monitor compliance closely. Repeat violations may lead to serious consequences, including enforcement actions and civil penalties.

A Turning Point in Digital Protection

The Take It Down Act marks the first time federal law has directly addressed AI-generated sexual abuse. Lawmakers say it reflects the seriousness of a modern threat that spreads quickly and leaves deep damage. Advocates call it a critical tool for a safer internet, especially for minors and women who have long endured deepfake attacks without legal support.

Public officials and industry leaders now face the responsibility of carrying it forward. Compliance, transparency, and respect for civil liberties will shape how this law changes the online landscape in the months ahead.

For victims who once had no voice, the law sends a message: help is now real, federal, and enforceable.
